MNIST Neural Networks

By Christian Reyes Santos.

As part of my CS 189 class at UC Berkeley, I set out to explore neural networks from the ground up. The project involved building both a feed-forward fully connected network and a convolutional neural network (CNN) using only NumPy: no high-level libraries like TensorFlow or PyTorch, just raw NumPy. The goal was to train both models on the MNIST dataset, a cornerstone dataset in machine learning and computer vision.

What is the MNIST Dataset?

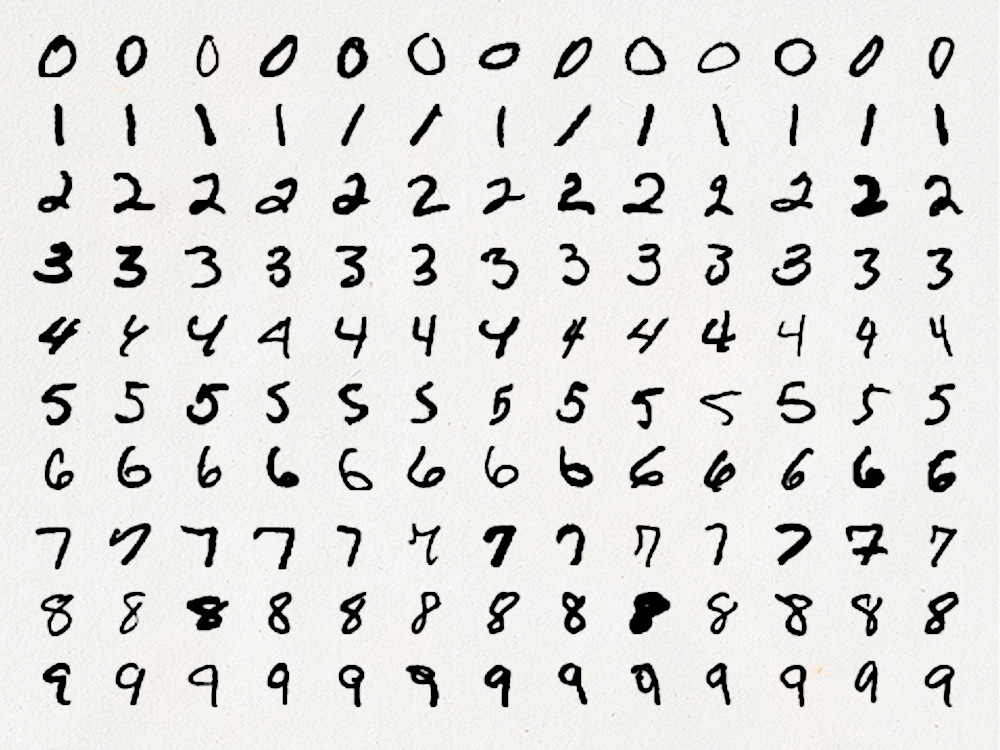

The MNIST (Modified National Institute of Standards and Technology) dataset is one of the most famous datasets in the machine learning community. It consists of 60,000 training images and 10,000 test images of handwritten digits, ranging from 0 to 9. Each image is a 28x28 grayscale image, making it a compact but rich dataset to work with.

MNIST is small and clean enough to train on quickly, yet the variation between people's handwriting makes digit recognition a genuine problem. That balance makes it a perfect starting point for experimenting with machine learning algorithms, and it is often called the "Hello, World!" of neural networks.

The Challenge: Building a Neural Network with NumPy

In my project, I set out to build two types of neural networks:

- Feed-Forward Fully Connected Network

- Convolutional Neural Network (CNN)

The entire process was a deep dive into the fundamentals of neural networks, where I had to implement all aspects, from forward propagation to backpropagation, using only NumPy.

The Feed-Forward Fully Connected Network

A feed-forward network is the simplest type of artificial neural network. In this network, the data moves in one direction—from input nodes, through hidden nodes (if any), and finally to output nodes. There are no cycles or loops in the network.
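To make the one-directional flow concrete, here is a minimal NumPy sketch of a forward pass through a fully connected network. It is an illustration, not the project's code: the layer sizes (784, 128, 64, 10), the function names, and the He-style random initialization are assumptions. Each layer applies a matrix multiplication plus a bias, hidden layers apply ReLU, and the output layer applies softmax.

```python
import numpy as np

def relu(z):
    # Element-wise max(0, z)
    return np.maximum(0.0, z)

def softmax(z):
    # Subtracting the row-wise max avoids overflow in exp and leaves the result unchanged
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def forward(x, params):
    """Forward pass through a stack of fully connected layers.

    x: (batch, 784) array of flattened 28x28 images.
    params: list of (W, b) pairs, where W has shape (fan_in, fan_out).
    """
    a = x
    for W, b in params[:-1]:
        a = relu(a @ W + b)        # hidden layer: affine map, then ReLU
    W, b = params[-1]
    return softmax(a @ W + b)      # output layer: one probability per digit

# Hypothetical layer sizes: 784 inputs, two hidden layers, 10 outputs
rng = np.random.default_rng(0)
sizes = [784, 128, 64, 10]
params = [(rng.normal(0.0, np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
          for m, n in zip(sizes[:-1], sizes[1:])]

x = rng.random((5, 784))           # random stand-in for 5 normalized images
probs = forward(x, params)
print(probs.shape)                 # (5, 10)
```

Each row of `probs` sums to 1, and the predicted digit is the index of the largest entry in that row.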

Implementation Highlights:

- Network Architecture: The network consisted of an input layer, several hidden layers, and an output layer. Each layer was fully connected to the next, meaning every neuron in one layer was connected to every neuron in the next layer.

- Activation Functions: I used the ReLU (Rectified Linear Unit) function, max(0, x), for the hidden layers and the softmax function for the output layer. ReLU introduces non-linearity into the network, and softmax converts the output layer's ten raw scores into a probability distribution over the digits 0 to 9, which is exactly what a multi-class classification problem like digit recognition needs.

- Forward Propagation: In forward propagation, the input is passed through the network layer by layer to obtain the output. This involves matrix multiplications and the application of activation functions.

- Backpropagation: To train the network, I implemented backpropagation, which applies the chain rule layer by layer, from the output back toward the input, to compute the gradient of the loss function with respect to every weight. Each weight is then adjusted by a small step in the opposite direction of its gradient, which reduces the error in the output.
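The forward and backward passes described above can be sketched for a network with a single hidden layer. This is a minimal illustration rather than the author's implementation: the hidden width of 32, the learning rate, the names `forward` and `loss_and_grads`, and the random stand-in data are all hypothetical. It relies on the standard simplification that, for softmax followed by cross-entropy, the gradient with respect to the output scores is the predicted probabilities minus the one-hot label.

```python
import numpy as np

def forward(x, W1, b1, W2, b2):
    """One hidden ReLU layer followed by a softmax output layer."""
    z1 = x @ W1 + b1
    a1 = np.maximum(0.0, z1)                   # ReLU
    z2 = a1 @ W2 + b2
    z2 = z2 - z2.max(axis=1, keepdims=True)    # stabilize exp; softmax is unchanged
    p = np.exp(z2)
    p /= p.sum(axis=1, keepdims=True)          # class probabilities
    return z1, a1, p

def loss_and_grads(x, y, W1, b1, W2, b2):
    """Mean cross-entropy loss and its gradients, computed by backpropagation.

    x: (n, 784) inputs; y: (n,) integer labels in 0..9.
    """
    n = x.shape[0]
    z1, a1, p = forward(x, W1, b1, W2, b2)
    loss = -np.log(p[np.arange(n), y]).mean()

    # Output layer: for softmax + cross-entropy, dL/dz2 = (p - one_hot(y)) / n
    dz2 = p.copy()
    dz2[np.arange(n), y] -= 1.0
    dz2 /= n
    dW2 = a1.T @ dz2
    db2 = dz2.sum(axis=0)

    # Hidden layer: chain rule through W2, then through ReLU (gradient is 0 where z1 <= 0)
    dz1 = (dz2 @ W2.T) * (z1 > 0)
    dW1 = x.T @ dz1
    db1 = dz1.sum(axis=0)
    return loss, (dW1, db1, dW2, db2)

rng = np.random.default_rng(0)
x = rng.random((8, 784))              # random stand-in for 8 flattened images
y = rng.integers(0, 10, size=8)       # random stand-in labels
W1 = rng.normal(0.0, np.sqrt(2.0 / 784), (784, 32))
b1 = np.zeros(32)
W2 = rng.normal(0.0, np.sqrt(2.0 / 32), (32, 10))
b2 = np.zeros(10)

lr = 0.01  # hypothetical learning rate
loss_before, grads = loss_and_grads(x, y, W1, b1, W2, b2)
# Gradient descent: move every parameter against its gradient
W1, b1, W2, b2 = [param - lr * grad
                  for param, grad in zip((W1, b1, W2, b2), grads)]
loss_after, _ = loss_and_grads(x, y, W1, b1, W2, b2)
print(f"loss before: {loss_before:.4f}, after one step: {loss_after:.4f}")
```

Without an autograd library to fall back on, comparing a few of these gradient entries against central finite differences, (L(w + ε) − L(w − ε)) / 2ε, is a cheap way to catch backpropagation bugs.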

Results:

The Convolutional Neural Network (CNN)

CNNs are a class of deep neural networks that are particularly effective for processing grid-like data, such as images. They are designed to automatically and adaptively learn spatial hierarchies of features from input images.

Implementation Highlights:

- LeNet Architecture: I followed the classic LeNet architecture, which consists of two convolutional layers, each followed by a pooling layer, and then fully connected layers leading to the output. LeNet is one of the earliest and simplest CNN architectures, making it a great learning tool.

- Convolutional Layers: These layers slide a set of small filters (kernels) across the input image, and each filter produces its own feature map. Different filters learn to detect different features, such as edges or textures.

- Pooling Layers: After convolution, pooling layers shrink the height and width of the feature maps (for example, by keeping only the largest value in each 2×2 window). This reduces the computational load and makes the network more robust to small shifts in the input.

- Fully Connected Layers: Finally, the output from the convolutional and pooling layers is fed into fully connected layers, which perform the final classification.
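The convolution and pooling steps can be sketched in NumPy as follows. This is a deliberately naive illustration (explicit loops, one image at a time), not the project's code. The 6 filters of size 5×5 mirror the first layer of LeNet-5, but the 28×28 input, the ReLU, and the choice of max pooling are assumptions: the original LeNet-5 used 32×32 inputs and trainable subsampling layers rather than max pooling. Like most deep learning libraries, the "convolution" here is computed as a cross-correlation (the kernel is not flipped).

```python
import numpy as np

def conv2d_valid(x, kernels, bias):
    """Naive 'valid' 2-D convolution (cross-correlation).

    x:       (C_in, H, W) input
    kernels: (C_out, C_in, k, k) filters
    bias:    (C_out,)
    returns: (C_out, H - k + 1, W - k + 1), one feature map per filter
    """
    c_out, c_in, k, _ = kernels.shape
    _, h, w = x.shape
    out = np.empty((c_out, h - k + 1, w - k + 1))
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            patch = x[:, i:i + k, j:j + k]     # (C_in, k, k) window under the filters
            # Dot every filter with the same window in a single call
            out[:, i, j] = np.tensordot(kernels, patch, axes=([1, 2, 3], [0, 1, 2])) + bias
    return out

def max_pool2x2(x):
    """2x2 max pooling with stride 2 on a (C, H, W) array; H and W must be even."""
    c, h, w = x.shape
    # Group each 2x2 window into its own pair of axes, then take the max over them
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

rng = np.random.default_rng(0)
image = rng.random((1, 28, 28))            # random stand-in for one normalized digit
k1 = rng.normal(0.0, 0.1, (6, 1, 5, 5))    # hypothetical: 6 filters of size 5x5
fmap = np.maximum(0.0, conv2d_valid(image, k1, np.zeros(6)))   # convolution + ReLU
pooled = max_pool2x2(fmap)
print(fmap.shape, pooled.shape)            # (6, 24, 24) (6, 12, 12)
```

The shapes follow from simple arithmetic: a 5×5 filter fits in 28 − 5 + 1 = 24 positions along each axis, and 2×2 pooling halves 24 to 12. Practical implementations usually vectorize the convolution as well, for example with an im2col-style rearrangement, because the Python loops above are slow.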

Results:

Note: I did not include a video because it is too long.

Training the Networks

Training the networks on the MNIST dataset was a significant challenge but also a rewarding experience. Here’s how I approached it:

- Data Preprocessing: I normalized the pixel values of the images from their original range of 0 to 255 to a range of 0 to 1. Keeping the inputs on a small, consistent scale helps training converge faster.

- Initialization: The weights of the network were initialized randomly. Proper initialization is crucial to avoid problems like vanishing or exploding gradients.

- Loss Function and Optimization: I used the cross-entropy loss function, which is common for classification tasks. For optimization, I implemented the gradient descent algorithm to update the weights.

- Validation: I monitored validation accuracy to evaluate the performance of the models. Both networks achieved strong results, and the CNN in particular reached a validation accuracy of 96-97%.
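The training steps listed above can be sketched as a few small NumPy helpers. Everything here is an assumption for illustration rather than the project's actual code: the function names, He-normal initialization (the write-up only says the weights were initialized randomly), mini-batch shuffling (the write-up only says gradient descent), the validation split size, and the synthetic uint8 arrays standing in for MNIST. The flattening in `preprocess` suits the fully connected network; a CNN would keep each image as a (1, 28, 28) array instead.

```python
import numpy as np

def preprocess(images):
    """Scale uint8 pixels from [0, 255] to [0, 1] and flatten each 28x28 image to 784 values."""
    x = images.astype(np.float64) / 255.0
    return x.reshape(len(x), -1)

def he_init(fan_in, fan_out, rng):
    """He-normal initialization (std = sqrt(2 / fan_in)), a common choice for ReLU layers."""
    W = rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))
    return W, np.zeros(fan_out)

def cross_entropy(probs, labels):
    """Mean cross-entropy between predicted class probabilities and integer labels."""
    n = len(labels)
    # A small epsilon guards against log(0)
    return -np.log(probs[np.arange(n), labels] + 1e-12).mean()

def train_val_split(x, y, n_val, rng):
    """Shuffle once, then hold out n_val examples for validation."""
    order = rng.permutation(len(x))
    val, train = order[:n_val], order[n_val:]
    return x[train], y[train], x[val], y[val]

def minibatches(x, y, batch_size, rng):
    """Yield shuffled mini-batches that together cover one epoch."""
    order = rng.permutation(len(x))
    for start in range(0, len(x), batch_size):
        idx = order[start:start + batch_size]
        yield x[idx], y[idx]

def accuracy(probs, labels):
    """Fraction of examples whose most probable class matches the label."""
    return float((probs.argmax(axis=1) == labels).mean())

# Synthetic MNIST-shaped stand-in data (not the real dataset)
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(120, 28, 28), dtype=np.uint8)
labels = rng.integers(0, 10, size=120)

x = preprocess(images)
x_tr, y_tr, x_val, y_val = train_val_split(x, labels, n_val=20, rng=rng)
W, b = he_init(784, 10, rng)
print(x.shape, x_tr.shape, x_val.shape)   # (120, 784) (100, 784) (20, 784)
```

In a full training loop, each batch from `minibatches` would go through the forward pass, backpropagation, and a gradient descent update, with `accuracy` computed on the held-out split after every epoch to track validation performance.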

Reflections and Learnings

Building these neural networks from scratch using only NumPy was an eye-opening experience. It provided me with a deep understanding of the inner workings of neural networks. Here are some key takeaways from this project:

- Understanding the Fundamentals: Implementing neural networks from scratch forced me to understand every detail, from matrix multiplications to the intricacies of backpropagation.

- Appreciation for High-Level Libraries: This project made me appreciate high-level libraries like TensorFlow and PyTorch, which handle many of these complexities for us, such as computing gradients automatically.

- The Power of CNNs: The convolutional neural network, with its ability to learn spatial hierarchies of features, demonstrated its power and effectiveness in image recognition tasks.

The MNIST dataset and the process of building neural networks from scratch have been foundational in my journey through machine learning and deep learning. This project not only honed my technical skills but also ignited a passion for exploring more complex and advanced architectures in the future.

Feel free to check out the code and further details on my GitHub repository.

Related Links:

- MNIST Dataset on Yann LeCun's website

- LeNet Architecture Paper

- LeNet Wikipedia Page

- CS 189 Course Website

- Convolutional Neural Networks

About the Author

Christian Reyes Santos is a passionate UC Berkeley graduate, exploring the realms of machine learning, computer science, and data science. Through hands-on projects and deep dives into theory, he continually seeks to push the boundaries of what's possible with the mind.